|

I am a fourth-year PhD candidate at Renmin University of China, supervised by Prof. Jun He from Renmin University of China and Prof. Hongyan Liu from Tsinghua University. I'm actively seeking internship opportunities that align with my research interests. If you know of any openings or have recommendations, I'd greatly appreciate your input. My areas of focus include AI-generated content, talking head generation, and video generation. |

|


Ziqiao Peng, Jiwen Liu, Haoxian Zhang, Xiaoqiang Liu, Songlin Tang, Pengfei Wan, Di Zhang, Hongyan Liu, Jun He Project / arXiv We present OmniSync, a universal lip synchronization framework for diverse visual scenarios. |

|

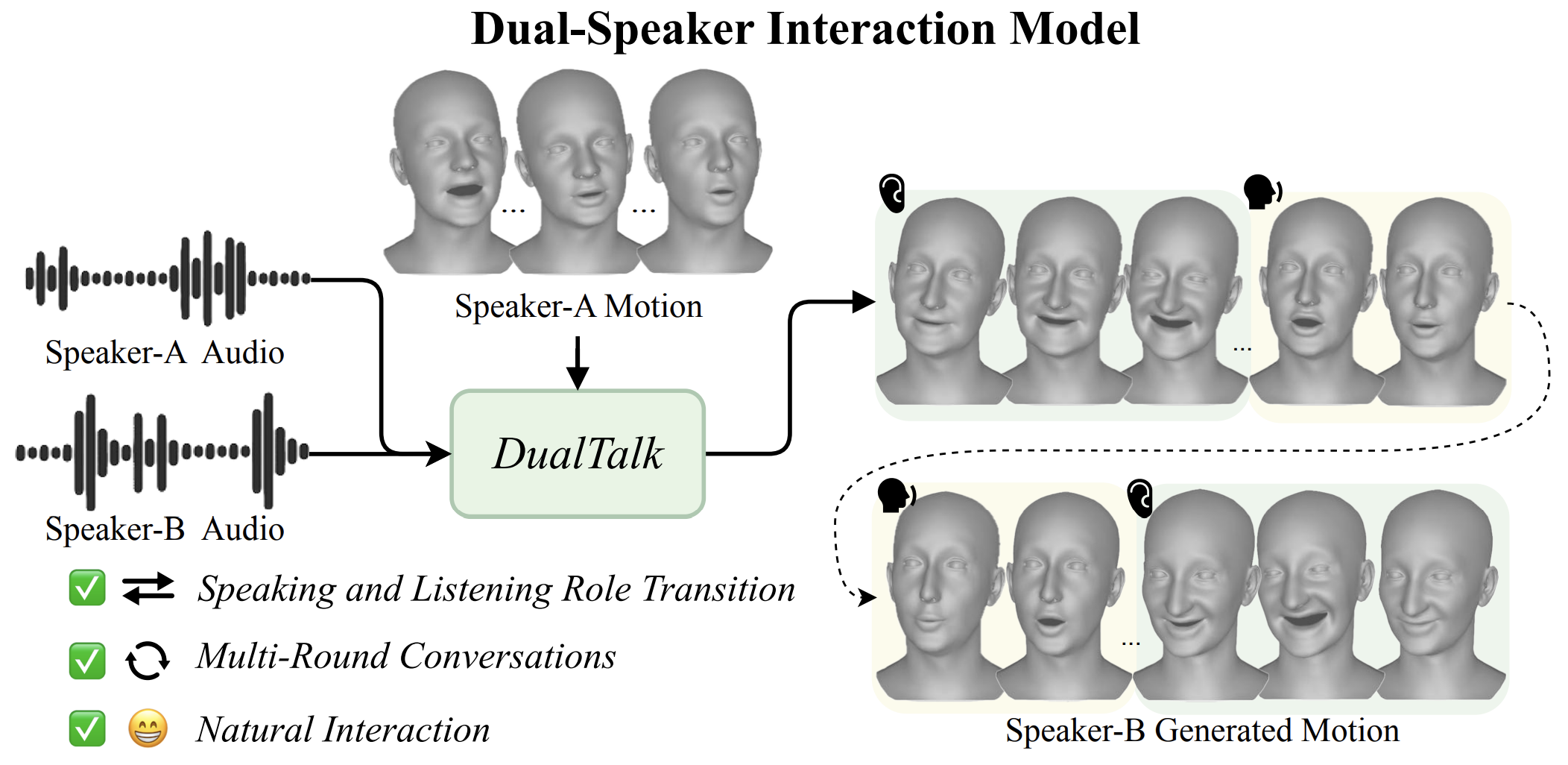

Ziqiao Peng, Yanbo Fan, Haoyu Wu, Xuan Wang, Hongyan Liu, Jun He, Zhaoxin Fan Project / arXiv We propose a new task -- multi-round dual-speaker interaction for 3D talking head generation -- which requires models to handle and generate both speaking and listening behaviors in continuous conversation. |

|

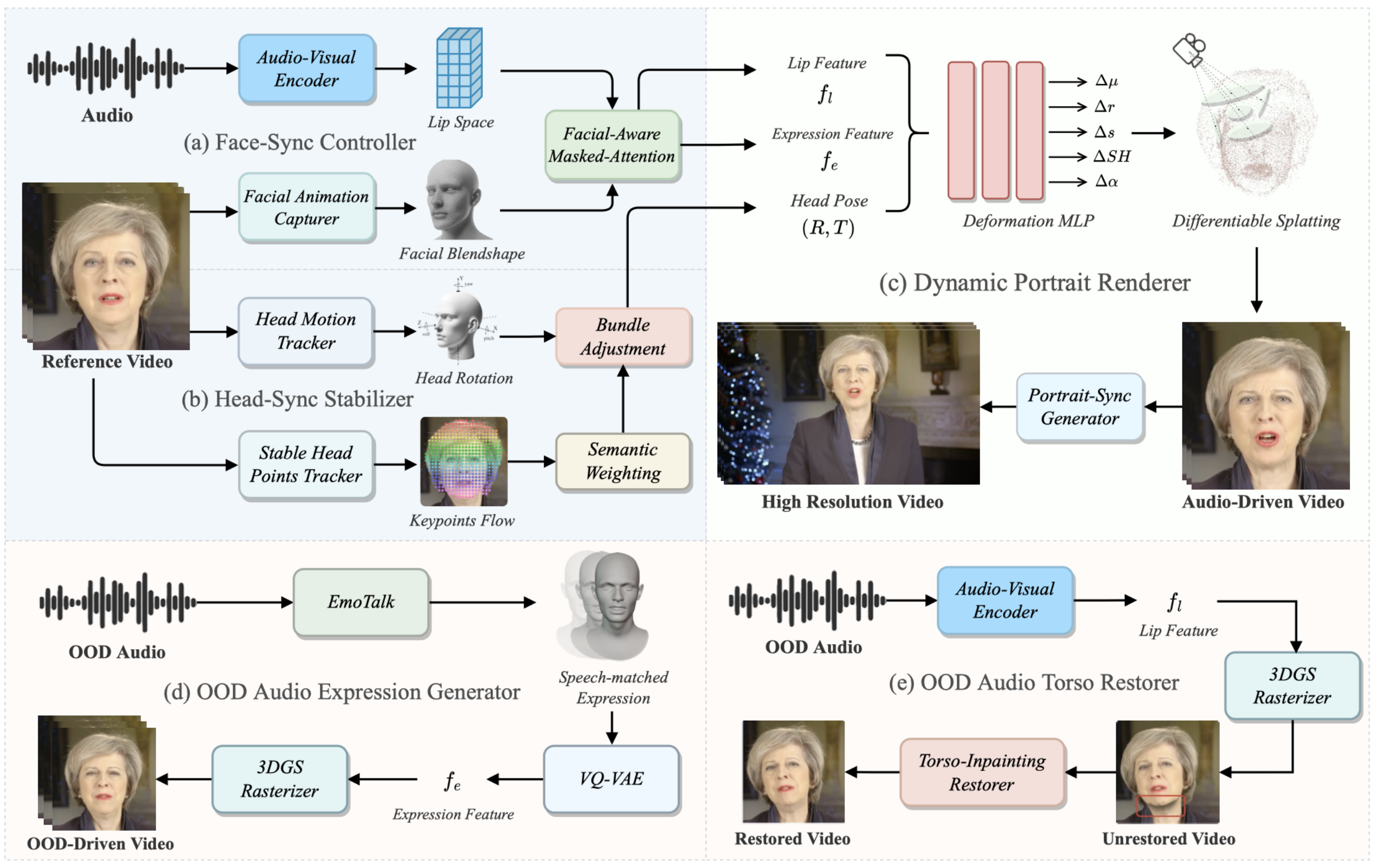

Ziqiao Peng, Wentao Hu, Junyuan Ma, Xiangyu Zhu, Xiaomei Zhang, Hao Zhao, Hui Tian, Jun He, Hongyan Liu, Zhaoxin Fan Project / arXiv We propose a 3DGS-based method to synthesize realistic talking head videos with better out-of-distribution (OOD) quality. |

|

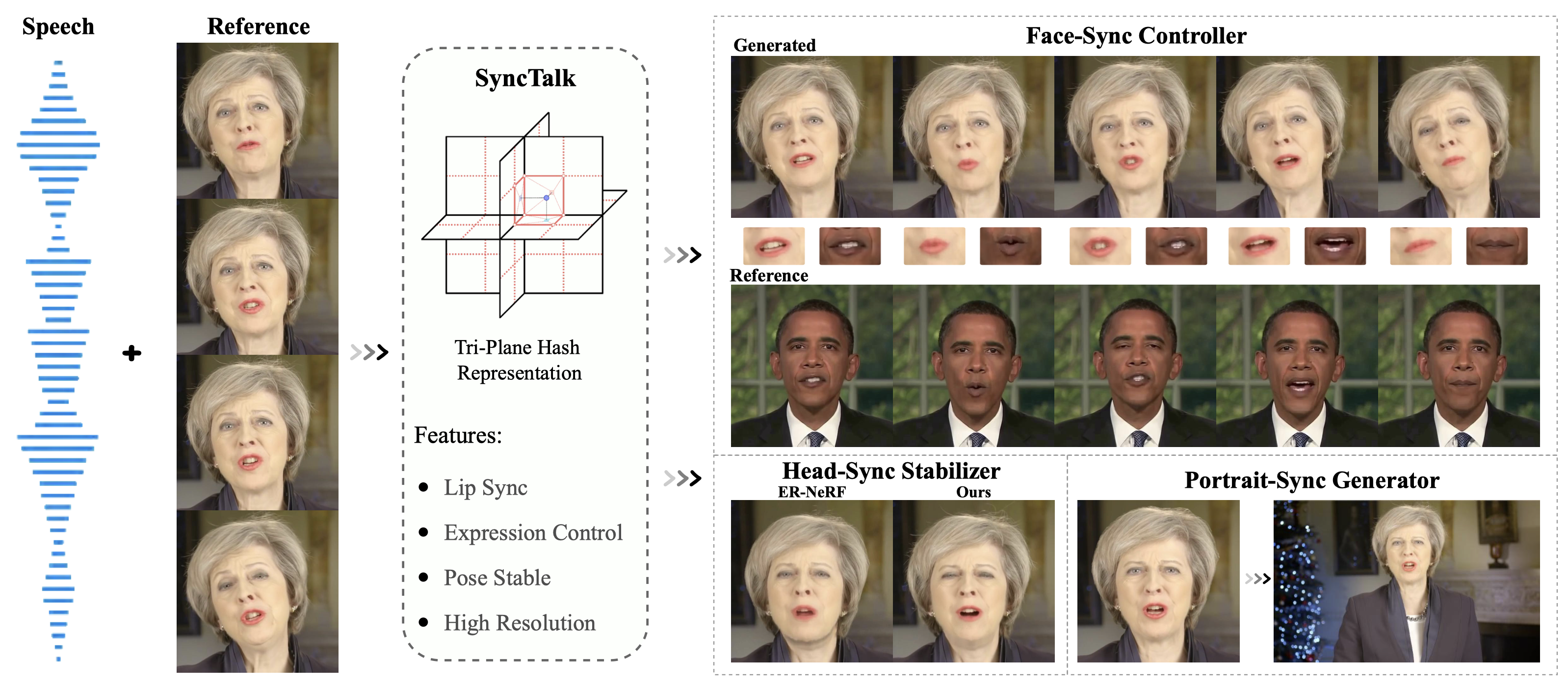

Ziqiao Peng, Wentao Hu, Yue Shi, Xiangyu Zhu, Xiaomei Zhang, Hao Zhao, Jun He, Hongyan Liu, Zhaoxin Fan Project / arXiv / Code We propose a NeRF-based method to synthesize realistic talking head videos. |

|

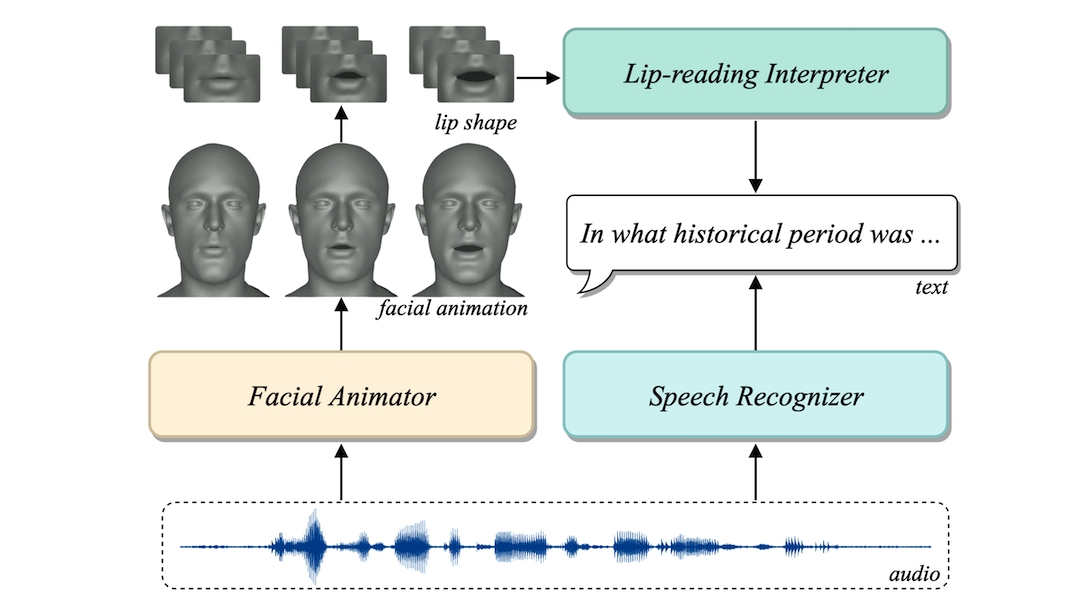

Ziqiao Peng, Yihao Luo, Yue Shi, Hao Xu, Xiangyu Zhu, Hongyan Liu, Jun He, Zhaoxin Fan Project / arXiv / Code We propose SelfTalk, a novel framework that uses a cross-modal network system to generate coherent and visually comprehensible 3D talking faces by reducing the domain gap between different modalities. |

|

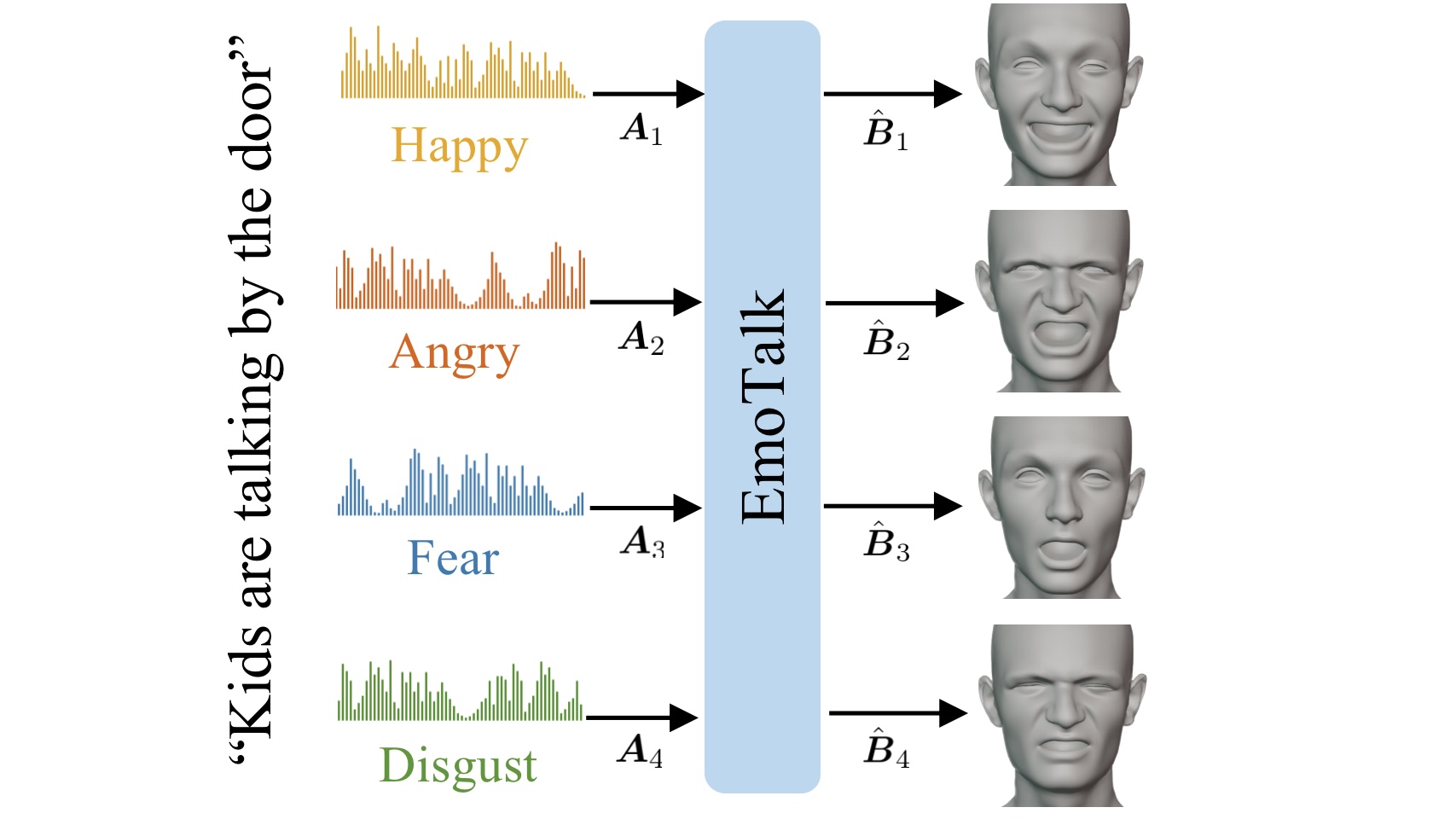

Ziqiao Peng, Haoyu Wu, Zhenbo Song, Hao Xu, Xiangyu Zhu, Hongyan Liu, Jun He, Zhaoxin Fan Project / arXiv / Code We propose an end-to-end neural network for speech-driven emotion-enhanced 3D facial animation. |

|

Conferences:

CVPR, NeurIPS, ICCV, ECCV, ACM MM, ICME, Eurographics

Journals:

IJCV, TIP, TMM, TOMM, IET Image Processing, IET Computer Vision

|